In this post I’m going to share my experiences with tuning slow SQL queries in Java web applications. Following these 5 super-easy steps you’ll have a clear understanding on the performance bottlenecks in sql database and the indexes you’ll need to add to improve performance of your queries.

In this post I’m going to share my experiences with tuning slow SQL queries in Java web applications. Following these 5 super-easy steps you’ll have a clear understanding on the performance bottlenecks in sql database and the indexes you’ll need to add to improve performance of your queries.

1. Identify slow queries

JavaMelody is an open-source Java-based performance monitoring tool (https://code.google.com/p/javamelody/). It adds a monitoring dashboard to our web application where it provides extensive stats on the application performance, including SQL statement execution times and hit counts.

After Java Melody is set up, let’s go to the Statistics SQL section on the monitoring page and click on the Details link on the right to bring up the stats. Let’s click on the ‘% of cumulative time’ column to make sure the table is ordered by that column. Now let’s take the SQL statements with the highest percentage values and figure out how to improve them.

2. Analyze slow queries

Once a SQL query is selected, copy and paste them into your favourite SQL Editor (make sure to replace the question marks with actual values). Before executing it manually in Postgres, append the EXPLAIN ANALYZE keywords in front of the query. For example:

EXPLAIN ANALYZE select … from … join … join … where … order by …; |

The output will look similar to this:

Nested Loop Left Join (cost=1.720..11.310 rows=1 width=4955) (actual time=0.160..0.174 rows=1 loops=1)

Join Filter: (victor_seven0kilo_oscar.three = charlie_lima8kilo_oscar.quebec)

Rows Removed by Join Filter: 7

-> Nested Loop Left Join (cost=1.720..10.150 rows=1 width=4758) (actual time=0.144..0.158 rows=1 loops=1)

Join Filter: (six_seven6kilo_oscar.six_kilo = foxtrot_mike7kilo_oscar.quebec)

-> Nested Loop Left Join (cost=1.720..9.130 rows=1 width=3717) (actual time=0.139..0.153 rows=1 loops=1)

-> Nested Loop Left Join (cost=1.590..8.220 rows=1 width=3185) (actual time=0.124..0.136 rows=1 loops=1)

Join Filter: (victor_seven0kilo_oscar.four = charlie_lima4kilo_oscar.quebec)

Rows Removed by Join Filter: 6

-> Nested Loop Left Join (cost=1.590..7.060 rows=1 width=2988) (actual time=0.113..0.122 rows=1 loops=1)

Join Filter: (charlie_lima1kilo_oscar.oscar_juliet = romeo3kilo_oscar.quebec)

Rows Removed by Join Filter: 4

-> Nested Loop Left Join (cost=1.590..6.000 rows=1 width=1431) (actual time=0.106..0.115 rows=1 loops=1)

Join Filter: (charlie_lima1kilo_oscar.six_kilo = foxtrot_mike2kilo_oscar.quebec)

Rows Removed by Join Filter: 1

-> Nested Loop Left Join (cost=1.590..4.970 rows=1 width=390) (actual time=0.099..0.108 rows=1 loops=1)

Join Filter: (victor_seven0kilo_oscar.papa = charlie_lima1kilo_oscar.quebec)

Rows Removed by Join Filter: 7

-> Hash Join (cost=1.590..3.820 rows=1 width=193) (actual time=0.079..0.088 rows=1 loops=1)

Hash Cond: (whiskey5kilo_oscar.quebec = victor_seven0kilo_oscar.alpha)

-> Seq Scan on whiskey kilo_two (cost=0.000..2.160 rows=16 width=119) (actual time=0.009..0.029 rows=16 loops=1)

-> Hash (cost=1.580..1.580 rows=1 width=74) (actual time=0.033..0.033 rows=1 loops=1)

Buckets: 1024 Batches: 1 Memory Usage: 1kB

-> Seq Scan on victor_seven echo (cost=0.000..1.580 rows=1 width=74) (actual time=0.028..0.028 rows=1 loops=1)

Filter: (quebec = 705)

Rows Removed by Filter: 45

-> Seq Scan on seven_romeo uniform (cost=0.000..1.070 rows=7 width=197) (actual time=0.007..0.013 rows=7 loops=1)

-> Seq Scan on foxtrot_mike foxtrot_two (cost=0.000..1.010 rows=1 width=1041) (actual time=0.003..0.003 rows=1 loops=1)

-> Seq Scan on juliet seven_golf (cost=0.000..1.030 rows=3 width=1557) (actual time=0.002..0.003 rows=4 loops=1)

-> Seq Scan on seven_romeo charlie_bravo (cost=0.000..1.070 rows=7 width=197) (actual time=0.003..0.009 rows=7 loops=1)

-> Index Scan using charlie_echo on six_seven victor_three (cost=0.130..0.900 rows=1 width=532) (actual time=0.012..0.013 rows=1 loops=1)

Index Cond: (whiskey5kilo_oscar.six_india = quebec)

-> Seq Scan on foxtrot_mike yankee (cost=0.000..1.010 rows=1 width=1041) (actual time=0.002..0.002 rows=1 loops=1)

-> Seq Scan on seven_romeo oscar_echo (cost=0.000..1.070 rows=7 width=197) (actual time=0.004..0.008 rows=7 loops=1)

3. Evaluate results of explain plan

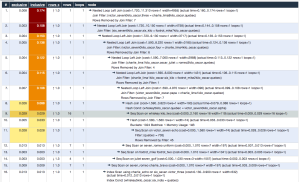

Now copy the result and paste it to http://explain.depesz.com/ and you’ll get something similar to below table. From this table the most important parts are the ‘exclusive’ and the ‘rows’ columns. Values in the exclusive column tell the time it took to perform the join described in the node column. Values in the rows column tell the number of rows the query had to process to perform the join.

4. Add necessary indexes

Once we identified the joins with the highest exclusive times and the highest row count, we’re ready to add necessary indexes:

create index victor_seven0kilo_oscar_alph_idx on victor_seven0kilo_oscar (alpha); |

5. Verify changes

Verify the actual performance improvements by repeating steps #2 and #3.

If you feel you got stuck, drop a comment and I’d be happy to help you out.